Getting Started: Computer-Use Agents with the AskUI Python SDK

Learn how to build computer-use agents with the AskUI Python SDK. Run your first VisionAgent (agent.act/agent.get), then make runs debuggable and repeatable with Tool Store tools like screenshots, file I/O, and LoadImageTool.

2026 Strategy: Testing HTML5 Canvas with Computer Use Agents

Stop failing to test HTML5 Canvas. Learn how AI vision agents (like AskUI) see inside the "black box" that traditional tools can't.

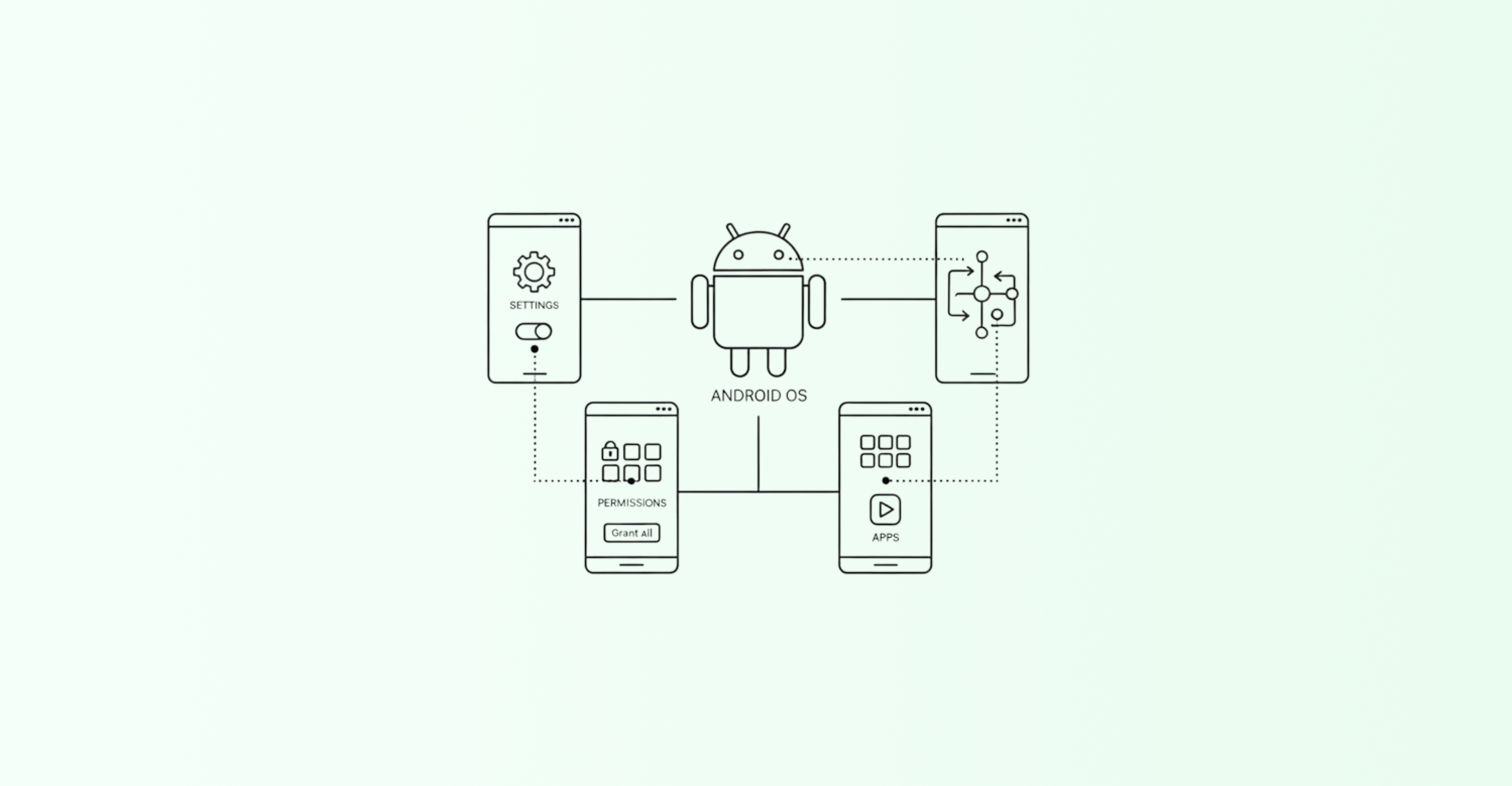

Top 10 Agentic AI Tools for Android Testing in 2026

A 2026 ranking of agentic AI systems for Android testing based on AndroidWorld Pass@1 results, plus enterprise-ready guidance on OS-level autonomous QA.

Testing the "Invisible" Enterprise: Why Computer Use Agents are Key to DOM-Free Automation

Enterprise software built on Qt, WPF, and Canvas remains invisible to traditional DOM-based automation. Discover how AskUI’s Computer Use Agents enable resilient, DOM-free automation across desktop and virtualized environments.

The 60/40 Profit Trap: Defending Margins in Fixed-Price HMI Projects

Fixed-price HMI projects quietly lose margins to fragile automation maintenance. The real profit killer is the Maintenance Tax; learn how agentic, intent-driven automation can eliminate it.

Zero-Shot Scalability: One Test Logic, Ten Different OEM UIs

UI fragmentation across OEM brands forces Tier-1 suppliers to duplicate and maintain the same test logic multiple times. AskUI’s Zero-Shot Scalability enables a single abstracted test logic to validate diverse automotive UIs instantly using Agentic AI.

Beyond Jest: Scaling Enterprise Automation with AskUI Agentic AI

In 2026, enterprise automation is shifting from brittle scripts to Agentic AI. Learn how AskUI’s vision-based Computer Use Agents eliminate selector debt and power intent-driven QA at scale.

Understanding AskUI: The Eyes and Hands of AI Agents

AskUI turns AI from thinking into doing by separating planning (Vision Agent) from execution (Agent OS) and enabling vision-first control across real operating systems.

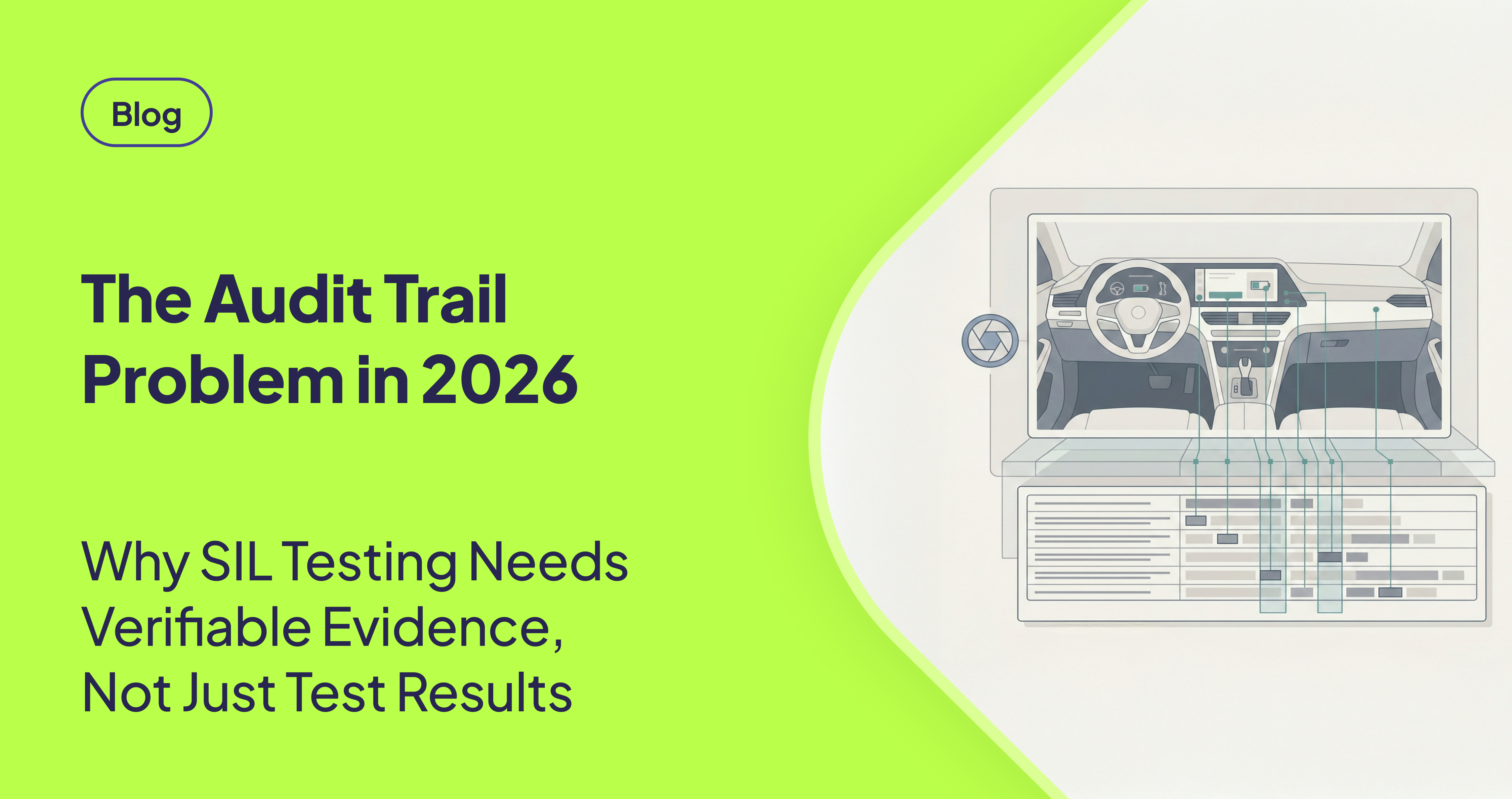

The Audit Trail: Verifiable Evidence for Automotive Compliance

In 2026, automotive compliance is defined by proof, not test results. This article explains how agentic AI enables deterministic traceability between HMI behavior and system logs to generate audit-ready evidence in SIL testing.

Deep Functional Navigation: State Machine Validation via Agentic AI

As HMI logic evolves into high-density state machines, click-and-verify testing breaks down. This article explains how agentic AI enables deep functional navigation by reasoning through UI-visible states and validating complex decision paths in real time.

Integrated System Logic: Synchronizing the Cockpit

Isolated display tests miss integration bugs like time desync and logic mismatches in modern digital cockpits. This post explains cross-layer verification in SIL and how multi-agent orchestration can validate time-aligned behavior across Cluster and CID.

Adaptive Resilience: Intent-Driven Testing for OTA Cycles

OTA updates break brittle, coordinate-based tests in SDV HMI stacks. Adaptive Resilience shifts automation from fixed pixels to functional intent, enabling self-healing execution across layout changes and mixed platforms like QNX and Android.