Large language models (LLMs) have ushered in the era of “Thinking Machines.” However, a massive gap remains in the business world: the disconnect between “Thinking” (the Brain) and “Doing” (the Body).

No matter how brilliant the strategy an AI generates, the actual work (opening SAP, manipulating Excel spreadsheets, sending reports via Slack) requires concrete action. Until now, this domain has been restricted to humans or to brittle, code-dependent scripts.

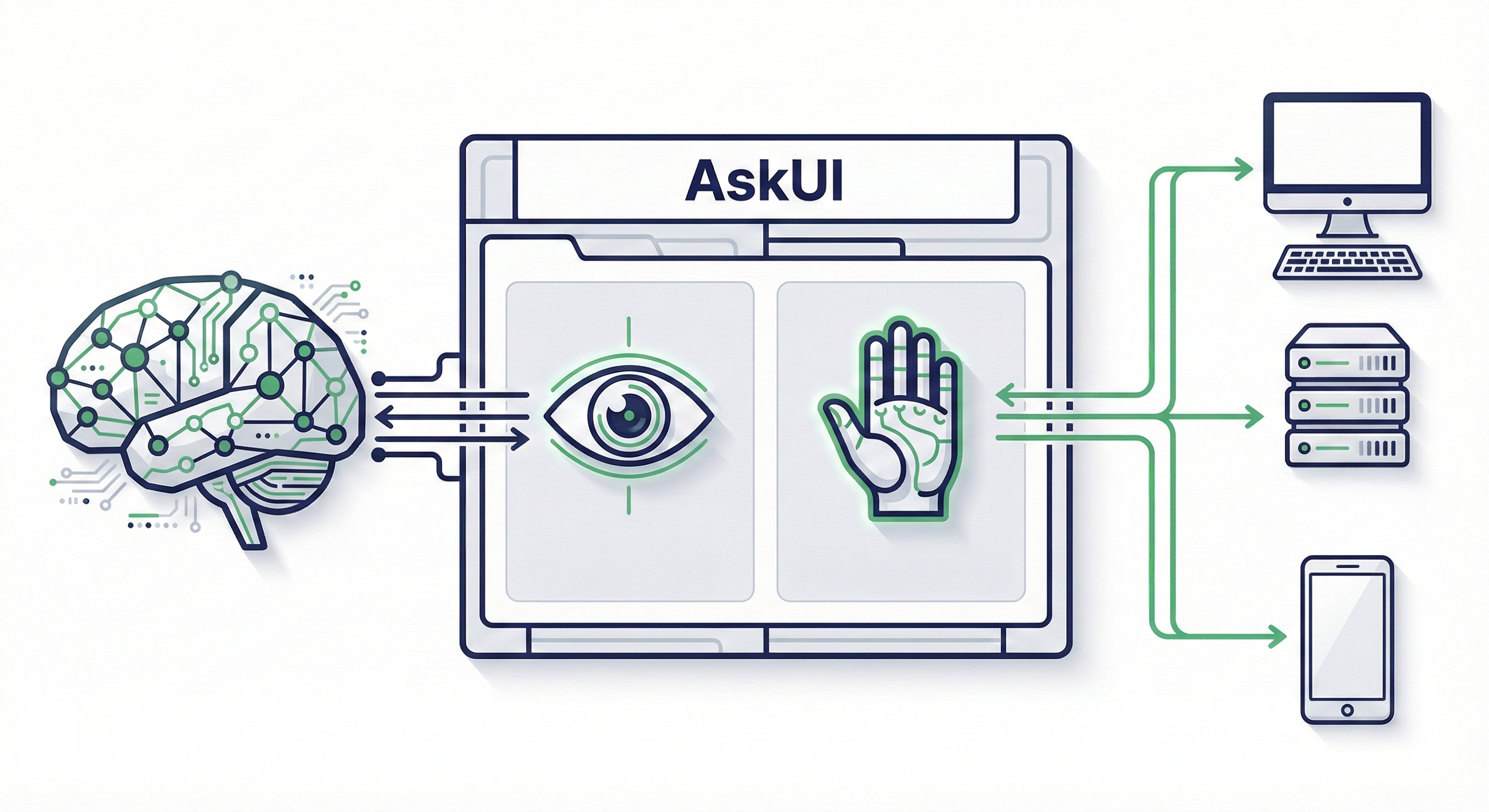

AskUI was built to bridge this gap. We provide the infrastructure that enables true Agentic Automation by giving AI models the ability to See, Understand, and Interact with any operating system.

This capability transforms passive models into active Computer Use Agents, effectively giving the AI a body to execute its thoughts.

This post explores the architecture of AskUI and how decoupling reasoning from execution lets it function not just as a tool, but as the Interface Layer for the next generation of Agentic AI.

1. The Core Concept: Unifying Perception and Control

Traditional automation tools (Selenium, Appium) rely on the application's internal code (DOM, accessibility tree). This is akin to operating a computer with your eyes closed, feeling around for buttons by their ID tags.

Consider the difference in implementation:

```python
# Assumes `driver` is a Selenium WebDriver and `agent` is an AskUI VisionAgent.
from selenium.webdriver.common.by import By

# The Old Way (Brittle)
# Breaks if the developer restructures the page or moves the button into a Shadow DOM.
driver.find_element(By.XPATH, "/html/body/div[2]/form/button").click()

# The AskUI Way (Robust)
# Works by "seeing" the text or element, regardless of the underlying code.
agent.click("Sign In")
```

To enable true Agentic capabilities, AskUI adopts a Vision-First approach:

- Human-Like Perception: The agent sees the screen as pixels. Just as a human does, it understands visual context: text, icons, layout, and color changes.

- Selector-Less Interaction: No complex XPaths or CSS selectors are required. Natural-language commands like “Click the demo request button” are translated into spatial coordinates and executed.

Because of this paradigm shift, AskUI agents can control legacy systems that expose no API, remote desktop sessions, and even mobile devices.
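The step "translated into spatial coordinates" can be illustrated with a small sketch. This is a simplified illustration, not AskUI's implementation: it assumes a hypothetical vision model has already returned a bounding box for the target in screenshot pixels, and that the screenshot may have been downscaled before inference, so the click point has to be mapped back to the real screen resolution. `Box` and `to_screen_point` are hypothetical names.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Bounding box in screenshot pixels (hypothetical model output)."""
    left: int
    top: int
    right: int
    bottom: int


def to_screen_point(
    box: Box,
    screenshot_size: tuple[int, int],
    screen_size: tuple[int, int],
) -> tuple[int, int]:
    """Return the on-screen click point for the center of `box`.

    The box refers to a possibly downscaled screenshot, so the center is
    rescaled from screenshot pixels to physical screen pixels.
    """
    center_x = (box.left + box.right) / 2
    center_y = (box.top + box.bottom) / 2
    scale_x = screen_size[0] / screenshot_size[0]
    scale_y = screen_size[1] / screenshot_size[1]
    return round(center_x * scale_x), round(center_y * scale_y)


# Hypothetical model output for "Click the demo request button"
box = Box(left=100, top=200, right=300, bottom=240)
print(to_screen_point(box, screenshot_size=(1280, 720), screen_size=(2560, 1440)))
# -> (400, 440)
```

Clicking the center of the box is the simplest choice; it is robust to small localization errors at the edges of the element.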

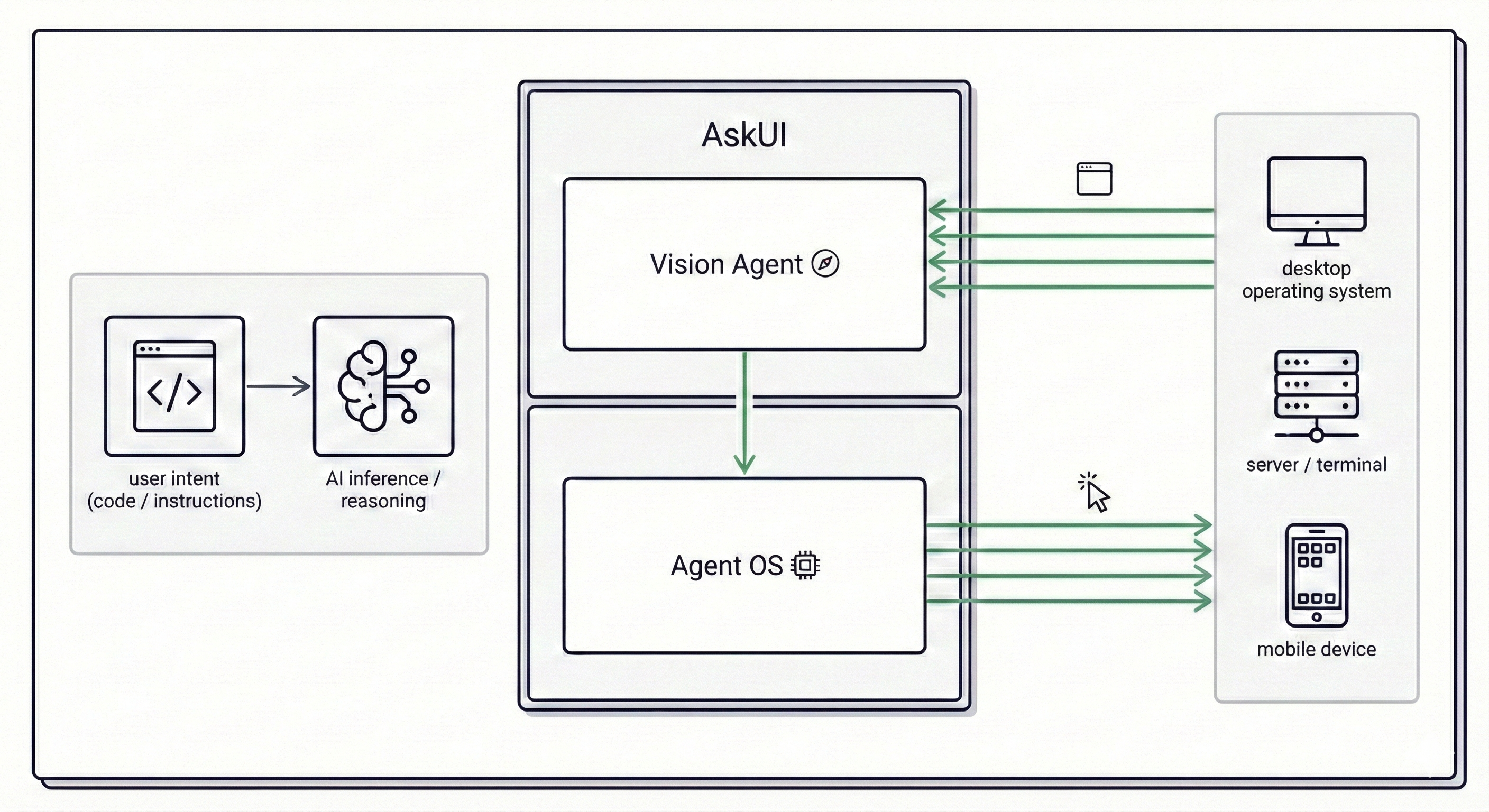

2. Architecture: Decoupling Intelligence from Execution

Our core design relies on a clear separation of the Planner from the Executor. This separation optimizes for scalability, security, and performance.

2.1 Vision Agent (The Brain & Planner)

The Vision Agent is the Python-based reasoning engine. It translates high-level intents into executable actions.

```python
from askui import VisionAgent

with VisionAgent() as agent:
    # The agent analyzes the screen, plans the steps, and executes.
    agent.act("Open the CRM and find the latest invoice.")
```

- Multimodal Reasoning: It combines screen captures with the user’s high-level goal to analyze the current state.

- Dynamic Planning: Instead of following a rigid script, it determines the next action in real time. If a popup appears, it may decide to close it; if loading takes too long, it may decide to wait.
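The observe, plan, act cycle described above can be sketched as a plain loop. This is a conceptual illustration, not AskUI's internal planner: `observe`, `plan`, and `execute` are hypothetical stand-ins for screen capture, model reasoning, and Agent OS input, and the screens are simulated as strings.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    kind: str  # "click", "wait", or "done"
    target: str = ""


def run_agent_loop(
    goal: str,
    observe: Callable[[], str],
    plan: Callable[[str, str], Action],
    execute: Callable[[Action], None],
    max_steps: int = 20,
) -> list[Action]:
    """Repeat observe -> plan -> execute until the planner reports "done"."""
    history: list[Action] = []
    for _ in range(max_steps):
        state = observe()           # what is on screen right now
        action = plan(goal, state)  # the Brain picks the next step
        history.append(action)
        if action.kind == "done":
            return history
        execute(action)             # the Body carries it out
    raise TimeoutError(f"Goal not reached in {max_steps} steps: {goal}")


def toy_planner(goal: str, state: str) -> Action:
    """Hypothetical planner that reacts to whatever the screen shows."""
    if "popup" in state:
        return Action("click", "Close")
    if "loading" in state:
        return Action("wait")
    return Action("done")


# Simulated screens: a popup appears, then a slow load, then the target view.
screens = iter(["popup", "loading", "invoice list"])
steps = run_agent_loop(
    "Open the CRM and find the latest invoice.",
    observe=lambda: next(screens),
    plan=toy_planner,
    execute=lambda action: print("executing", action),
)
print([a.kind for a in steps])  # -> ['click', 'wait', 'done']
```

The key property is that the next action is chosen from the current screen, not from a precomputed script, and `max_steps` bounds the loop so a confused planner cannot run forever.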

2.2 Agent OS (The Body & Executor)

The Agent OS is a lightweight runtime installed on the target device (Windows, macOS, Linux, and mobile). This is where actions are executed.

- OS-Level Control: Unlike browser-sandboxed extensions, Agent OS operates at the system input layer, directly managing mouse movement, keyboard input, touch gestures, and display sessions.

- Deterministic Execution: Instead of relying on code injection or DOM hooks, Agent OS simulates system-level input events. This enables reliable interaction with remote desktop environments (e.g., Citrix), automotive HMIs, and infotainment systems: contexts where traditional DOM-based automation breaks down.
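The Planner/Executor split can be pictured as a narrow, serializable command channel: the Brain decides and emits a small input event, and the on-device Body decodes and performs it. The sketch below is a hypothetical illustration, not Agent OS's actual protocol. `InputEvent`, `encode`, `execute`, and `RecordingBackend` are invented names, and a real backend would inject OS-level input instead of recording calls.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class InputEvent:
    kind: str  # "click", "type", or "key"
    x: int = 0
    y: int = 0
    text: str = ""


def encode(event: InputEvent) -> str:
    """Planner side: serialize a decided action for the on-device executor."""
    return json.dumps(asdict(event))


@dataclass
class RecordingBackend:
    """Stand-in for OS-level input injection; records calls for inspection."""
    calls: list = field(default_factory=list)

    def click(self, x: int, y: int) -> None:
        self.calls.append(("click", x, y))

    def type_text(self, text: str) -> None:
        self.calls.append(("type", text))

    def press_key(self, key: str) -> None:
        self.calls.append(("key", key))


def execute(message: str, backend: RecordingBackend) -> None:
    """Executor side: decode one command and dispatch it to the input backend."""
    event = InputEvent(**json.loads(message))
    if event.kind == "click":
        backend.click(event.x, event.y)
    elif event.kind == "type":
        backend.type_text(event.text)
    elif event.kind == "key":
        backend.press_key(event.text)
    else:
        raise ValueError(f"Unknown input event: {event.kind}")


backend = RecordingBackend()
events = [
    InputEvent("click", 400, 440),
    InputEvent("type", text="admin"),
    InputEvent("key", text="enter"),
]
for ev in events:
    execute(encode(ev), backend)
print(backend.calls)
# -> [('click', 400, 440), ('type', 'admin'), ('key', 'enter')]
```

Keeping the channel this small means the reasoning side can run anywhere, while the executor only needs to understand a handful of input primitives.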

Benchmark Performance (OSWorld)

On the OSWorld benchmark, AskUI Vision Agent achieves a State-of-the-Art score of 66.2 on multimodal computer-use tasks, reflecting strong performance on real-world operating system interactions.

3. Latency Architecture: The Physics of Speed

A common misconception is that “Agentic AI” is slow. This perception usually stems from the inference time of large models. AskUI addresses it by architecturally separating Decision Latency from Execution Latency.

- The Bottleneck: AI Inference (>500 ms)

  For an agent to “see” and “decide,” time is inevitably consumed (screenshot capture → upload → token processing). This is the necessary cost of intelligence.

- The Optimization: Native Execution (a few milliseconds)

  However, once a decision is made, execution must be near-instantaneous. Because Agent OS runs locally on the device, it typically carries out input actions within a few milliseconds, avoiding network-induced latency. As a result, agent interactions can feel fluid and responsive compared to remote or fully cloud-executed agents.
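A back-of-the-envelope model shows why separating the two latencies matters. The figures are illustrative assumptions, not AskUI measurements: 500 ms per model decision (the lower bound above), 5 ms per locally executed action, and a hypothetical 100 ms network round trip added to each action when execution is remote.

```python
def workflow_latency_ms(steps: int, decision_ms: float, execution_ms: float) -> float:
    """Total time for a workflow where each step needs one decision and one action."""
    return steps * (decision_ms + execution_ms)


STEPS = 20            # assumed number of UI actions in the workflow
DECISION_MS = 500     # assumed model inference time per step (screenshot -> decision)
LOCAL_EXEC_MS = 5     # assumed on-device execution time per action
REMOTE_EXEC_MS = 105  # assumed: same action plus a 100 ms network round trip

local = workflow_latency_ms(STEPS, DECISION_MS, LOCAL_EXEC_MS)
remote = workflow_latency_ms(STEPS, DECISION_MS, REMOTE_EXEC_MS)
print(f"local: {local:.0f} ms, remote: {remote:.0f} ms")
print(f"share spent deciding (local): {STEPS * DECISION_MS / local:.1%}")
# -> local: 10100 ms, remote: 12100 ms
# -> share spent deciding (local): 99.0%
```

Under these assumptions, local execution removes the per-action network overhead, and almost all remaining time is spent deciding, so the next lever is reducing how often the model has to be consulted at all.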

4. Enterprise Readiness: Observability, Safety & Standards

The biggest barriers to enterprise adoption of Agentic Automation are integration complexity and the “Black Box” problem. AskUI addresses these challenges through Observability, Guardrails, and Open Standards.

Actions as First-Class Entities

AskUI logs every action the agent performs. This transforms agent behavior from opaque “AI thoughts” into traceable operational events.

- Log Mapping: You can correlate an agent’s “Click Pay” log with your backend “Transaction Processed” log to verify end-to-end integrity.
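A minimal sketch of that correlation, assuming both systems emit timestamped records and that the backend event is expected within a few seconds of the agent's click. The `LogRecord` fields, the event names, and the 5-second window are hypothetical; this is not AskUI's log format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class LogRecord:
    timestamp: datetime
    source: str  # "agent" or "backend"
    event: str


def correlate(
    agent_logs: list[LogRecord],
    backend_logs: list[LogRecord],
    action: str,
    expected: str,
    window: timedelta = timedelta(seconds=5),
) -> list[tuple[LogRecord, Optional[LogRecord]]]:
    """Pair each agent `action` with the first `expected` backend event inside `window`.

    Actions with no matching backend event are paired with None so they can be flagged.
    """
    pairs = []
    for record in agent_logs:
        if record.event != action:
            continue
        match = next(
            (
                b
                for b in backend_logs
                if b.event == expected
                and record.timestamp <= b.timestamp <= record.timestamp + window
            ),
            None,
        )
        pairs.append((record, match))
    return pairs


t0 = datetime(2025, 1, 1, 12, 0, 0)
agent_logs = [
    LogRecord(t0, "agent", "Click Pay"),
    LogRecord(t0 + timedelta(minutes=1), "agent", "Click Pay"),
]
backend_logs = [LogRecord(t0 + timedelta(seconds=2), "backend", "Transaction Processed")]

for click, confirmation in correlate(agent_logs, backend_logs, "Click Pay", "Transaction Processed"):
    status = "verified" if confirmation else "MISSING backend event"
    print(click.timestamp.time(), status)
# -> 12:00:00 verified
# -> 12:01:00 MISSING backend event
```

A time window is the simplest join key; if your systems share a request or trace ID, joining on that ID is more precise than timestamps.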

Safety Guardrails: Programmable Logic & OS Isolation

Unlike closed “Black Box” agents, AskUI operates as a library integrated directly into your infrastructure. This allows engineers to implement logic-based guardrails directly in code, intercepting and blocking risky commands before they are ever sent to the agent.

Additionally, the agent runs as a standard OS user, so it is bound by the operating system’s file permission model: administrative actions it is not authorized to perform are blocked by the OS itself.
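You can also assert this isolation in your own harness, so the agent never starts under an administrative account by accident. This is a hedged, standard-library sketch rather than an AskUI feature; `is_privileged` and `require_standard_user` are hypothetical helper names. It relies on `os.geteuid()` on POSIX systems and the Windows `IsUserAnAdmin` shell call via `ctypes`.

```python
import ctypes
import os


def is_privileged() -> bool:
    """Return True if the current process runs as root/Administrator."""
    if hasattr(os, "geteuid"):  # Linux, macOS
        return os.geteuid() == 0
    try:  # Windows
        return bool(ctypes.windll.shell32.IsUserAnAdmin())
    except AttributeError:  # unknown platform: assume the worst
        return True


def require_standard_user() -> None:
    """Abort before starting the agent if it would run with admin rights."""
    if is_privileged():
        raise PermissionError("Refusing to start the agent with administrative privileges.")
```

Calling `require_standard_user()` before `with VisionAgent() as agent:` turns a configuration mistake into an immediate, visible error instead of a silently over-privileged agent.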

```python
from askui import VisionAgent

# Example: A programmable guardrail pattern
# You can wrap the agent to enforce strict compliance policies.
def safe_act(agent: VisionAgent, instruction: str) -> None:
    # 1. Pre-check: block risky keywords based on your internal policy
    forbidden_actions = ["delete", "format", "shutdown", "upload"]
    if any(risky in instruction.lower() for risky in forbidden_actions):
        raise ValueError(f"Security Policy Alert: The action '{instruction}' was blocked.")

    # 2. Execution: only commands that pass the check reach the agent
    agent.act(instruction)


# Usage in production
with VisionAgent() as agent:
    try:
        # This command is intercepted and blocked before the agent sees it
        safe_act(agent, "Delete all files in System32")
    except ValueError as e:
        print(e)  # In production, forward this alert to your SIEM/monitoring system
```

Extensibility via MCP (Model Context Protocol)

AskUI supports the future of agentic interoperability. Our agents integrate with the Model Context Protocol (MCP), often described as the “USB-C for AI.” This allows AskUI agents to securely connect with external tools and data sources such as PostgreSQL databases, Slack workspaces, or internal APIs, standardizing how your agents interact with the broader digital ecosystem beyond the UI.
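MCP is built on JSON-RPC 2.0, so “standardizing” here means every tool invocation uses the same envelope regardless of which server provides the tool. The sketch below builds such a `tools/call` request with the standard library. The tool name `query_invoices` and its arguments are hypothetical, and this is not AskUI's MCP client code.

```python
import json
from itertools import count

_request_ids = count(1)


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


# Hypothetical tool exposed by an internal PostgreSQL-backed MCP server
print(mcp_tool_call("query_invoices", {"customer": "ACME", "limit": 1}))
# -> {"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "query_invoices", "arguments": {"customer": "ACME", "limit": 1}}}
```

A real client first performs the MCP `initialize` handshake and discovers available tools via `tools/list`; those steps and the transport layer are omitted here.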

5. Architectural Efficiency: The Hybrid Execution Model

Pure AI agents are powerful but can be nondeterministic and costly for repetitive tasks. AskUI overcomes this limitation by implementing a Hybrid Execution Architecture.

This mechanism allows the agent to “learn” a complex workflow once using its reasoning capabilities (the Brain), and then convert it into a deterministic trajectory that Agent OS executes instantly (the Body).

This approach bypasses the expensive LLM inference loop for known tasks, achieving native execution speed and near-zero token cost for repeated workflows and transforming the economics of high-frequency enterprise testing.
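Conceptually, the learn-once, replay-many pattern can be sketched in plain Python. This is an illustrative model, not AskUI's caching implementation: `plan_with_llm` is a hypothetical stand-in for the reasoning loop (which acts and returns the steps it took), and a trajectory is simply a JSON list of recorded actions stored in a file.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_with_cache(
    goal: str,
    cache_file: Path,
    plan_with_llm: Callable[[str], list[dict]],
    replay: Callable[[dict], None],
) -> str:
    """Replay a stored trajectory if one exists; otherwise learn and store it.

    Returns "replayed" or "learned" so callers can see which path ran.
    """
    if cache_file.exists():
        for step in json.loads(cache_file.read_text()):
            replay(step)  # deterministic, no model call
        return "replayed"
    trajectory = plan_with_llm(goal)  # expensive reasoning, done once
    cache_file.write_text(json.dumps(trajectory))
    return "learned"


llm_calls = []
replayed_steps = []


def fake_planner(goal: str) -> list[dict]:
    """Hypothetical planner; records how often the "model" is consulted."""
    llm_calls.append(goal)
    return [{"action": "type", "text": "admin"}, {"action": "click", "target": "Login"}]


cache = Path(tempfile.mkdtemp()) / "login_flow.json"
first = run_with_cache("Login", cache, fake_planner, replay=replayed_steps.append)
second = run_with_cache("Login", cache, fake_planner, replay=replayed_steps.append)
print(first, second, len(llm_calls), len(replayed_steps))
# -> learned replayed 1 2
```

A production design also needs to detect when the UI has changed so that a stale trajectory falls back to the model; that check is omitted here.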

```python
from askui import VisionAgent
from askui.models.shared.settings import CachingSettings

# Scenario: "Neuro-Symbolic Execution"
# 1. Learning phase: the AI reasons and explores the UI to find the path.
# 2. Execution phase: the system replays the learned path deterministically.
with VisionAgent() as agent:
    agent.act(
        goal="Login with user 'admin' and password 'secret'",
        caching_settings=CachingSettings(
            strategy="both",  # Learn (write) or execute (read)
            filename="login_flow.json",  # The learned trajectory
        ),
    )
```

6. Why This Matters Now

2026 marks the beginning of the era of Agentic AI.

Until now, we have had “Talking AI.” Now we need “Computer Use Agents” that can act. But action requires responsibility and stability. AskUI combines agentic perception with OS-level control to provide a standard interface for AI to interact with the real world safely and reliably.

Conclusion

AskUI is more than a testing tool; it is the new operating system for Agentic Automation.

By decoupling intelligence from execution, we provide the reliable “Eyes and Hands” that AI needs to perform real work. Move beyond AI that only thinks: build an enterprise-grade Computer Use Agent that acts.

Start building today:

→ GitHub